Smart Data Management

Optimize your digital workplace through intelligent data management

In times of increasingly complex data streams and big data applications, the amount of data to be managed and where it is stored are of great importance to companies. The type of data also plays a key role in professional data management: What information is available? How sensitive is it? For what purpose is it needed? And how can it be made available for specific situations?

In view of the increasing digitization of the workplace, access to relevant data must also be possible from home, while traveling, or from different branch offices - for example, for cross-location digital teamwork. Archiving and file sharing are therefore two crucial topics in everyday business life and the prerequisite for successful document management.

Professional data management through data analytics and data governance

With a professional data management platform, you get an efficient solution for managing structured and unstructured data in your company and can make the most of large volumes of data. With the help of data analytics, data from different sources can be collected and examined to establish relevant correlations. By analyzing big data, you create the basis for strong competitive advantages.

Data governance (master data management) enables you to benefit from high data quality by reliably managing the availability, integrity, usability, and security of your company data. This makes it easier for you to identify and avoid risks, and it lets you use your company's potential more efficiently and reduce your costs.

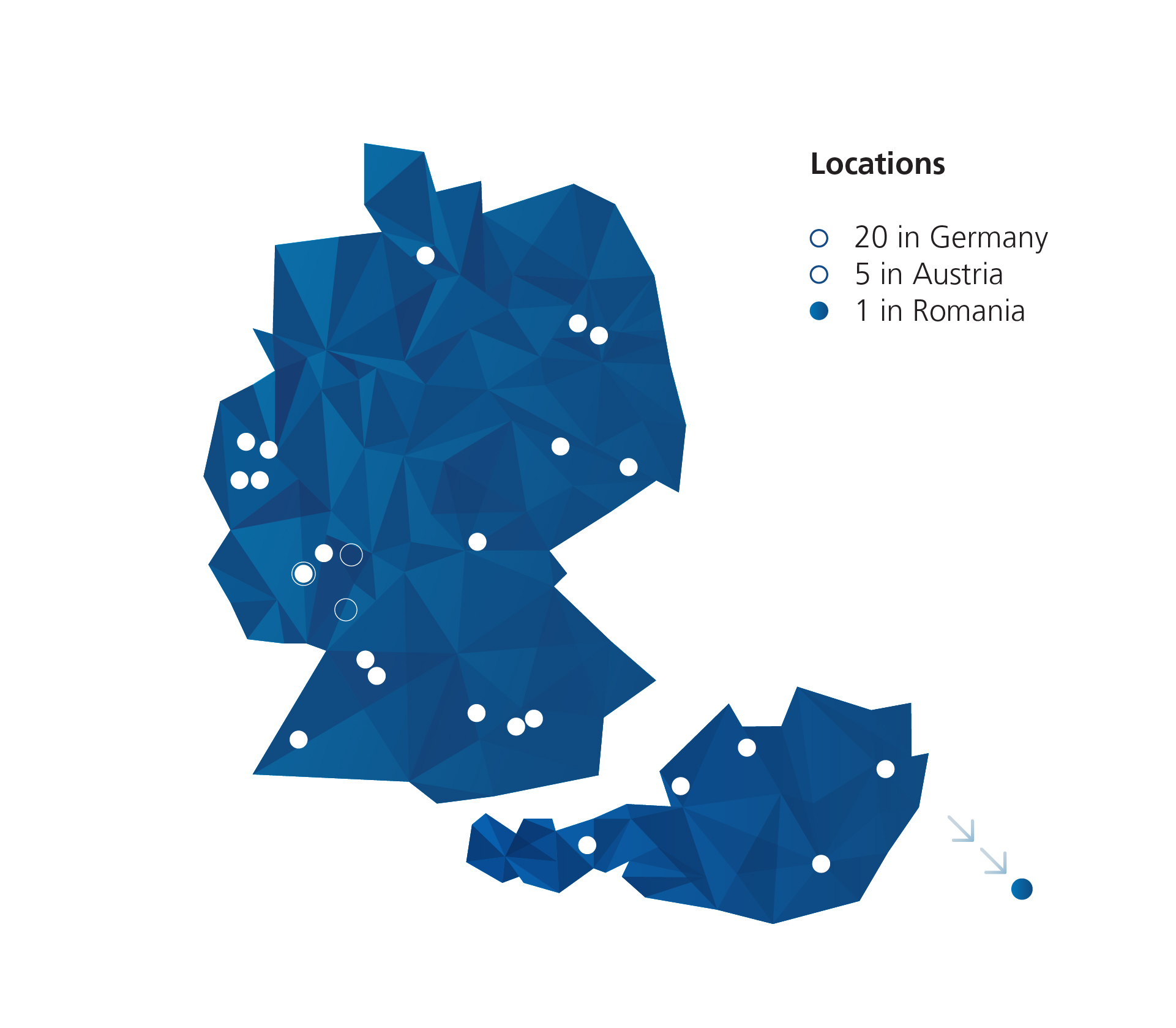

This means that nothing stands in the way of managing and collaboratively using your data. As an IT system house with many years of experience on the international market, we support you with the following solutions:

Medialine AG: Your Partner for Data Management Platforms

Take advantage of efficient data management and improve the collaboration of your teams: We provide targeted advice on how best to manage your data according to the individual requirements of your company, and we support you in selecting and implementing suitable solutions.